
Introduction to AI Agents

AI agents represent the next evolution in artificial intelligence: autonomous systems that can perceive their environment, make decisions, and take actions to achieve specific goals. In 2025, building custom AI agents has become more accessible than ever thanks to powerful frameworks like LangChain and advanced language models such as GPT-4.

This guide walks you through creating your own AI agent. The agent can handle complex tasks, maintain conversation context, access external data and execute specific functions, all while leveraging the natural language understanding of GPT-4.

Technology Stack Overview

Our AI agent will be built using:

- GPT-4 (or GPT-4-turbo): OpenAI's large language model, used here for natural language understanding and generation

- LangChain: Framework for developing applications powered by language models

- Python 3.10+: Primary programming language for AI development

- FAISS/Chroma: Vector search library (FAISS) or vector database (Chroma) for efficient semantic retrieval; this guide's examples use Chroma

- FastAPI/Flask: Web frameworks for creating API endpoints

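```
# Sample requirements.txt
langchain==0.2.0
langchain-openai>=0.1,<0.2      # ChatOpenAI and OpenAIEmbeddings for LangChain 0.2
langchain-community>=0.2,<0.3   # community integrations, including the Chroma vector store
openai>=1.0,<2.0
python-dotenv>=1.0
fastapi==0.110.0
uvicorn==0.29.0
chromadb==0.5.0
```

AI Agent Architecture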

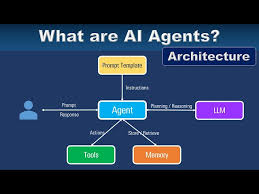

Our agent will follow a modular architecture with these key components:

- Core LLM (GPT-4): Handles language understanding and generation

- Memory Module: Maintains conversation history and context

- Tool Integration: Connects to external APIs and functions

- Knowledge Base: Provides domain-specific information via RAG

- Orchestrator: LangChain manages the workflow between components
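The sketch below is a dependency-free example of how an orchestrator can wire these components together in a ReAct-style loop. The LLM proposes an action, a tool runs, and the observation is fed back until the LLM gives a final answer. This is an illustration under stated assumptions, not LangChain's implementation. The `fake_llm` function is a hypothetical stand-in for GPT-4 that replays scripted replies. The `Action: tool[input]` / `Final Answer:` text protocol, the `run_agent` helper and the `weather` tool are also hypothetical. The knowledge base is omitted for brevity; a RAG lookup could be exposed as just another tool.

```python
import re
from typing import Callable, Dict, List


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for the core LLM: scripted replies instead of a GPT-4 call."""
    if "Observation:" not in prompt:
        return "Thought: I should look up the weather.\nAction: weather[Paris]"
    return "Thought: I have what I need.\nFinal Answer: It is sunny in Paris."


# Tool integration: tool name -> function taking one string input (hypothetical tool)
TOOLS: Dict[str, Callable[[str], str]] = {
    "weather": lambda city: f"Sunny, 22°C in {city}",
}

ACTION_RE = re.compile(r"Action: (\w+)\[(.*?)\]")


def run_agent(question: str, memory: List[str], max_steps: int = 5) -> str:
    """Orchestrator: alternate LLM calls and tool calls until a final answer appears."""
    history = "\n".join(memory)  # memory module: past turns are replayed into the prompt
    scratchpad = ""  # this run's actions and observations
    for _ in range(max_steps):
        reply = fake_llm(f"{history}\nQuestion: {question}\n{scratchpad}")
        if "Final Answer:" in reply:
            answer = reply.split("Final Answer:", 1)[1].strip()
            memory.append(f"Human: {question}\nAI: {answer}")  # remember this exchange
            return answer
        match = ACTION_RE.search(reply)
        if match is None:
            raise ValueError(f"Could not parse LLM reply: {reply!r}")
        name, tool_input = match.groups()
        observation = TOOLS[name](tool_input)
        scratchpad += f"{reply}\nObservation: {observation}\n"
    raise RuntimeError("Agent did not finish within max_steps")


memory: List[str] = []
print(run_agent("What's the weather in Paris?", memory))  # prints: It is sunny in Paris.
```

The `max_steps` cap is a deliberate design choice: it keeps a confused model from looping on tool calls forever.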

Environment Setup

Let's set up our development environment:

1. Install Python 3.10+ and create a virtual environment

```
python -m venv aiagent-env
source aiagent-env/bin/activate  # Linux/Mac
aiagent-env\Scripts\activate  # Windows
```

2. Install required packages

```
pip install -r requirements.txt
```

3. Set up OpenAI API key

Create a .env file in the project root with your OpenAI API key:

```
OPENAI_API_KEY=your-api-key-here
```

Implementation Steps

1. Basic Agent Initialization

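```python
from datetime import date

from dotenv import load_dotenv
from langchain.agents import AgentType, initialize_agent
from langchain_core.tools import Tool
from langchain_openai import ChatOpenAI

load_dotenv()  # loads OPENAI_API_KEY from .env into the environment

# GPT-4 is a chat model, so it needs ChatOpenAI rather than the completion-style OpenAI class
llm = ChatOpenAI(model="gpt-4", temperature=0.7)

# The zero-shot ReAct agent rejects an empty tool list, so start with one
# trivial placeholder tool; more tools are added later
tools = [
    Tool(
        name="Today",
        func=lambda _: date.today().isoformat(),
        description="Returns today's date in ISO format. The input is ignored.",
    )
]

agent = initialize_agent(
    tools=tools,
    llm=llm,
    agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION,
    verbose=True,
)
```

2. Adding Conversation Memory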
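Conversation memory amounts to storing past exchanges and rendering them into a prompt variable such as `{chat_history}` on every call. The dependency-free sketch below illustrates that idea. `BufferWindowMemory` is a hypothetical class, not LangChain's code. Its optional `k` window is an addition for illustration that caps how many exchanges are replayed, so the prompt does not grow without bound.

```python
from collections import deque
from typing import Optional


class BufferWindowMemory:
    """Hypothetical minimal conversation memory (not LangChain's implementation).

    Keeps past (human, ai) exchanges and renders them as text for a
    {chat_history} prompt variable. k=None keeps every exchange, like a plain
    buffer; an integer k keeps only the most recent k exchanges.
    """

    def __init__(self, k: Optional[int] = None) -> None:
        # deque(maxlen=k) silently discards the oldest exchange once k is exceeded
        self.turns: deque = deque(maxlen=k)

    def save_context(self, human: str, ai: str) -> None:
        self.turns.append((human, ai))

    def load(self) -> str:
        return "\n".join(f"Human: {h}\nAI: {a}" for h, a in self.turns)


window = BufferWindowMemory(k=2)
window.save_context("Hi, I'm Ada.", "Hello Ada!")
window.save_context("What's 2 + 2?", "4.")
window.save_context("Thanks!", "You're welcome.")

template = "Current conversation:\n{chat_history}\nHuman: {input}\nAI:"
print(template.format(chat_history=window.load(), input="What's my name?"))
# With k=2 the first exchange was dropped, so the model no longer sees "I'm Ada".
```

In LangChain, `ConversationBufferMemory` plays this role and keeps every exchange. Its `memory_key` must match the variable name the agent's prompt expects.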

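```python
from langchain.agents import AgentType, initialize_agent
from langchain.memory import ConversationBufferMemory

# memory_key must match the {chat_history} variable in the conversational agent's prompt
memory = ConversationBufferMemory(memory_key="chat_history")

# Memory is attached to the agent executor, and only a conversational agent type
# reads chat_history, so rebuild the agent with the same tools plus the memory
agent = initialize_agent(
    agent.tools, llm, agent=AgentType.CONVERSATIONAL_REACT_DESCRIPTION, memory=memory, verbose=True
)
```

Adding Memory & Context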

For more sophisticated memory handling, we can implement:

- ConversationSummaryMemory: Maintains condensed summaries of long conversations

- VectorStoreRetrieverMemory: Stores memories in a vector database for semantic retrieval

- Custom Memory Classes: Tailored to specific application needs
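Vector-store memory embeds each past exchange as a vector. At query time, only the most similar exchanges are pulled back into the prompt. The sketch below shows that retrieval step using cosine similarity over toy word-count vectors. The `embed` function is a hypothetical stand-in for a real embedding model such as OpenAIEmbeddings. A vector database like Chroma would replace the plain Python list.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Hypothetical toy 'embedding': lowercase word counts. Real systems use a trained model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class VectorMemory:
    """Stores past exchanges with their vectors; returns the k most similar to a query."""

    def __init__(self) -> None:
        self.items: list = []  # (text, vector) pairs

    def save(self, text: str) -> None:
        self.items.append((text, embed(text)))

    def search(self, query: str, k: int = 1) -> list:
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


memory = VectorMemory()
memory.save("Human: My favourite sport is tennis. AI: Noted!")
memory.save("Human: I live in Lisbon. AI: Lisbon is lovely.")
print(memory.search("what sport is my favourite?", k=1))
# prints ['Human: My favourite sport is tennis. AI: Noted!']
```

The `k` argument plays the same role as `search_kwargs=dict(k=1)` in the LangChain retriever. A small `k` keeps the prompt short, at the risk of missing relevant older context.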

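In LangChain, vector-store memory backed by Chroma and OpenAI embeddings can be set up like this:

```python
from langchain.memory import VectorStoreRetrieverMemory
from langchain_community.vectorstores import Chroma
from langchain_openai import OpenAIEmbeddings

embeddings = OpenAIEmbeddings()
vectorstore = Chroma(embedding_function=embeddings)

# Retrieve only the single most relevant past exchange for each new input
retriever = vectorstore.as_retriever(search_kwargs=dict(k=1))

# memory_key="chat_history" fills the same prompt variable the conversational agent reads
memory = VectorStoreRetrieverMemory(retriever=retriever, memory_key="chat_history")
```

Extending with Tools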

Tools enable your agent to interact with the external world. Common examples:

| Tool Type | Description | Example Implementations |
|---|---|---|
| Web Search | Access current information | SerpAPI, Google Search API |
| Calculator | Perform mathematical operations | LangChain's `llm-math` tool (LLMMathChain) |
| API Connectors | Integrate with external services | Custom API wrappers |
| Database Access | Query structured data | SQLDatabaseChain (langchain-experimental) |
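Whatever the backend, a tool comes down to three parts: a name, a natural-language description the LLM reads when choosing what to call, and a function that takes the model's text input. The registry sketch below illustrates this. The `Tool` dataclass, `render_tool_descriptions` and `dispatch` helpers, and the calculator tool are hypothetical. The calculator deliberately evaluates arithmetic by walking the `ast` tree rather than calling `eval`, so model-supplied input cannot run arbitrary code.

```python
import ast
import operator
from dataclasses import dataclass
from typing import Callable


@dataclass
class Tool:
    """Hypothetical minimal tool record (not LangChain's Tool class)."""
    name: str
    description: str  # shown to the LLM so it can decide when to call the tool
    func: Callable[[str], str]


# Whitelisted operators for the calculator; anything else is rejected
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.USub: operator.neg,
}


def _eval(node: ast.AST) -> float:
    """Evaluate an expression tree containing only numbers and whitelisted operators."""
    if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
        return node.value
    if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
        return _OPS[type(node.op)](_eval(node.left), _eval(node.right))
    if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
        return _OPS[type(node.op)](_eval(node.operand))
    raise ValueError(f"unsupported expression element: {type(node).__name__}")


def calculator(expression: str) -> str:
    return str(_eval(ast.parse(expression, mode="eval").body))


TOOLS = {
    "Calculator": Tool("Calculator", "Evaluates arithmetic such as '2 * (3 + 4)'.", calculator),
}


def render_tool_descriptions(tools: dict) -> str:
    """The text block an agent prompt would include so the LLM knows its options."""
    return "\n".join(f"{t.name}: {t.description}" for t in tools.values())


def dispatch(name: str, tool_input: str) -> str:
    """Run the tool the LLM asked for; failures come back as text the LLM can read."""
    if name not in TOOLS:
        return f"Unknown tool {name!r}. Available tools: {', '.join(TOOLS)}"
    try:
        return TOOLS[name].func(tool_input)
    except Exception as exc:
        return f"Tool error: {exc}"


print(render_tool_descriptions(TOOLS))
print(dispatch("Calculator", "2 * (3 + 4)"))  # prints 14
```

Returning errors as text, rather than raising, lets the LLM see what went wrong and adjust on its next step.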

Custom Tool Implementation

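```python
from langchain.agents import AgentType, initialize_agent
from langchain_core.tools import BaseTool


class WeatherTool(BaseTool):
    # Type annotations keep these valid field overrides on the pydantic-based BaseTool
    name: str = "GetCurrentWeather"
    description: str = "Useful for getting the current weather in a location. Input: a city name."

    def _run(self, location: str) -> str:
        # Placeholder: replace with a real weather API call
        return f"Weather in {location}: Sunny, 22°C"

    async def _arun(self, location: str) -> str:
        raise NotImplementedError("Async not supported")


# The agent's prompt lists its tools when the agent is built, so appending to
# agent.tools afterwards is not enough: rebuild it, keeping the same agent type and memory
agent = initialize_agent(
    [*agent.tools, WeatherTool()],
    llm,
    agent=AgentType.CONVERSATIONAL_REACT_DESCRIPTION,
    memory=agent.memory,
    verbose=True,
)
```

Deployment Options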

Once developed, you can deploy your AI agent through:

API Endpoint (FastAPI)

Pros:

- Easy integration with other systems

- Scalable with load balancing

- Standardized interface

Cons:

- Requires API management

- Additional latency

Web Application

Pros:

- User-friendly interface

- Direct user interaction

- Rich visualization options

Cons:

- Frontend development required

- Higher maintenance

FastAPI Deployment Example

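```python
from fastapi import FastAPI
from pydantic import BaseModel

app = FastAPI()


class Query(BaseModel):
    text: str


# A plain `def` endpoint runs in FastAPI's threadpool, so the blocking agent call does
# not stall the event loop. `agent` is the executor built in the steps above; every
# caller shares it, and therefore shares its conversation memory.
@app.post("/chat")
def chat(query: Query):
    result = agent.invoke({"input": query.text})
    return {"response": result["output"]}

# Run with: uvicorn main:app --reload  (assuming this code lives in main.py)
```

Performance Optimization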

To enhance your AI agent's performance:

- Caching: Implement response caching for common queries

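- Batching: Group independent requests and run them concurrently (LangChain runnables provide a `batch()` method for this)

- Model Optimization: Use GPT-4-turbo, which is cheaper per token and typically faster than GPT-4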

- Prompt Engineering: Refine system prompts for better accuracy

- Load Testing: Identify and address bottlenecks
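As an example of the caching item, the sketch below memoizes answers to repeated queries for a limited time, so identical questions within the window skip the model call entirely. It is a hypothetical in-process cache. `TTLCache`, `normalize` and `answer` are illustrative names, and `fake_model` stands in for the agent call. LangChain also offers built-in LLM caches. A shared store such as Redis would typically replace this dictionary once the API runs on several workers.

```python
import time
from typing import Callable, Optional


class TTLCache:
    """Hypothetical in-process cache whose entries expire `ttl` seconds after being stored."""

    def __init__(self, ttl: float, clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl
        self.clock = clock  # injectable so expiry can be tested without sleeping
        self._data: dict = {}  # key -> (stored_at, value)

    def get(self, key: str) -> Optional[str]:
        entry = self._data.get(key)
        if entry is None:
            return None
        stored_at, value = entry
        if self.clock() - stored_at > self.ttl:
            del self._data[key]  # expired: drop it and report a miss
            return None
        return value

    def set(self, key: str, value: str) -> None:
        self._data[key] = (self.clock(), value)


def normalize(query: str) -> str:
    """Collapse case and whitespace so trivially different queries share a cache key."""
    return " ".join(query.lower().split())


def answer(query: str, cache: TTLCache, call_model: Callable[[str], str]) -> str:
    key = normalize(query)
    cached = cache.get(key)
    if cached is not None:
        return cached
    result = call_model(query)  # the expensive agent/LLM call happens only on a miss
    cache.set(key, result)
    return result


calls: list = []


def fake_model(query: str) -> str:
    """Hypothetical stand-in for the agent; records each call so cache hits are visible."""
    calls.append(query)
    return f"answer to {query.strip()}"


cache = TTLCache(ttl=300)
answer("What is RAG?", cache, fake_model)
answer("  what is rag? ", cache, fake_model)
print(len(calls))  # prints 1: the second query was served from the cache
```

Caching whole agent answers is only safe for questions whose answers do not depend on the conversation so far or on the current time. The TTL bounds how stale a cached answer can get.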

```python
# Example of prompt optimization
from langchain.prompts import PromptTemplate

template = """You are a helpful AI assistant with expertise in {domain}.

Current conversation: {chat_history}

H: {input}
AI:"""

prompt = PromptTemplate(
    input_variables=["domain", "chat_history", "input"],
    template=template,
)
```

Conclusion & Next Steps

You've now built a functional AI agent using GPT-4 and LangChain! This foundation can be extended in numerous ways:

- Add more specialized tools for your domain

- Implement more sophisticated memory systems

- Integrate with enterprise systems

- Add multimodal capabilities (images, voice)

- Implement user authentication and personalization

The AI agent landscape in 2025 offers many opportunities for innovation. By combining open-source frameworks like LangChain with capable hosted models like GPT-4, individual developers and small teams can now build sophisticated AI solutions that were previously practical only for large tech companies.